Abstract

01 Introduction

Creating depth and motion effects in static images is a long-standing challenge in computer graphics and digital art. Traditional approaches often rely on depth maps, normal maps, or complex 3D reconstruction techniques. We propose an alternative method that generates pseudo-depth effects by combining flow field dynamics with adaptive mesh deformation.

Our approach draws inspiration from fluid dynamics visualization and computational geometry, using the organic patterns of Perlin noise to create natural-looking displacement fields. Coupling this with brightness-based adaptive sampling and Delaunay triangulation yields a system that adapts to image content while remaining computationally efficient.

02 Theoretical Foundation

2.1 Brightness-Based Point Sampling

Our method starts by sampling points from the source image. Rather than sampling uniformly, we employ a brightness-weighted stochastic process. For a given image \(I\) with dimensions \(W \times H\), the probability of selecting a point at position \((x, y)\) is given by:

\[P(x, y) = \rho \cdot \frac{B(I(x, y))}{B_{max}}\]

where \(B(I(x, y))\) represents the brightness value at pixel \((x, y)\), \(B_{max}\) is the maximum possible brightness (typically 100 in HSB space), and \(\rho\) is a user-defined density parameter controlling the overall sampling rate.
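As a minimal sketch of this sampling step, assuming the selection probability is the normalized brightness scaled by \(\rho\) and that brightness is available as a 2D list of values in \([0, B_{max}]\). The function name `sample_points` and the `rng` argument are illustrative, not taken from the original implementation.

```python
import random

def sample_points(brightness, density, b_max=100.0, rng=None):
    """Keep pixel (x, y) with probability density * brightness[y][x] / b_max.

    brightness: 2D list indexed as brightness[y][x] (assumed layout).
    density:    the rho parameter; probabilities are clamped to 1.
    """
    rng = rng or random.Random()
    points = []
    for y, row in enumerate(brightness):
        for x, b in enumerate(row):
            p = min(1.0, density * b / b_max)
            if rng.random() < p:
                points.append((x, y))
    return points

# Usage: a tiny 2x3 brightness grid with a fixed seed for repeatability.
grid = [[0.0, 50.0, 100.0],
        [100.0, 0.0, 25.0]]
pts = sample_points(grid, density=0.8, rng=random.Random(1))
```

Pixels with zero brightness are never selected under this rule, so dark regions end up with few or no mesh vertices.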

2.2 Delaunay Triangulation

Given the set of sampled points \(\mathcal{P} = \{p_1, p_2, ..., p_n\}\), we construct a Delaunay triangulation \(\mathcal{T}\). The Delaunay condition ensures that no point in \(\mathcal{P}\) lies inside the circumcircle of any triangle in \(\mathcal{T}\), mathematically expressed as:

\[\forall t \in \mathcal{T},\ \forall p \in \mathcal{P} \setminus V(t): \quad p \notin \text{circumcircle}(t)\]

where \(V(t)\) denotes the vertices of triangle \(t\). Among all triangulations of \(\mathcal{P}\), the Delaunay triangulation maximizes the minimum angle, which tends to suppress sliver triangles and improves mesh quality.
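The empty-circumcircle condition can be checked with the standard in-circle determinant. The sketch below is a brute-force verifier for a given triangulation, not a triangulation algorithm; the function names are illustrative and points are assumed to be `(x, y)` tuples.

```python
def orientation(a, b, c):
    """Twice the signed area of triangle abc (positive if counter-clockwise)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def in_circumcircle(a, b, c, p):
    """True if p lies strictly inside the circumcircle of triangle abc."""
    ax, ay = a[0] - p[0], a[1] - p[1]
    bx, by = b[0] - p[0], b[1] - p[1]
    cx, cy = c[0] - p[0], c[1] - p[1]
    det = ((ax * ax + ay * ay) * (bx * cy - cx * by)
           - (bx * bx + by * by) * (ax * cy - cx * ay)
           + (cx * cx + cy * cy) * (ax * by - bx * ay))
    # The sign of det depends on vertex order, so normalize by orientation.
    return det * orientation(a, b, c) > 0

def is_delaunay(points, triangles):
    """Check every triangle (index triple) against every other point: O(n * t)."""
    for i, j, k in triangles:
        for idx, p in enumerate(points):
            if idx in (i, j, k):
                continue
            if in_circumcircle(points[i], points[j], points[k], p):
                return False
    return True

# Usage: a kite-shaped point set with its two possible triangulations.
kite = [(0, 0), (4, 0), (2, 1), (2, -1)]
short_diagonal = [(0, 3, 2), (3, 1, 2)]   # split along the short diagonal
long_diagonal = [(0, 1, 2), (0, 1, 3)]    # split along the long diagonal
```

For the kite above, only the split along the short diagonal satisfies the condition; the other split produces two thin triangles whose circumcircles each contain the opposite vertex.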

2.3 Flow Field Generation

The displacement field is generated using 3D Perlin noise, creating smooth, continuous gradients. For each point \((x, y)\) at time \(t\), the flow field angle \(\theta\) is computed as:

\[\theta(x, y, t) = 2\pi \cdot \eta \cdot \text{Perlin}(x \cdot z, y \cdot z, \tau \cdot t)\]

where \(z\) is the zoom factor controlling the spatial frequency of the noise, \(\tau\) is the temporal evolution rate, and \(\eta\) is a scaling factor (typically 2) allowing for multiple rotations.
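A minimal sketch of the angle computation follows. It assumes the noise function returns values in \([0, 1]\) and takes the noise function as an argument, since Python's standard library has no Perlin implementation. `standin_noise` is a smooth placeholder for demonstration only and is not Perlin noise.

```python
import math

def flow_angle(x, y, t, noise, zoom, tau, eta=2.0):
    """Flow angle: 2*pi * eta * noise(x*zoom, y*zoom, t*tau).

    noise: callable (x, y, z) -> value, assumed to lie in [0, 1].
    """
    n = noise(x * zoom, y * zoom, t * tau)
    return 2.0 * math.pi * eta * n

def standin_noise(x, y, z):
    """Smooth placeholder in [0, 1]; substitute a real Perlin noise function."""
    return 0.5 + 0.5 * math.sin(x) * math.cos(y) * math.cos(z)

# Usage: angle at pixel (120, 80) on frame 30, with illustrative parameter values.
theta = flow_angle(120, 80, 30, standin_noise, zoom=0.01, tau=0.02)
```

With \(\eta = 2\) and noise in \([0, 1]\), the angle ranges over \([0, 4\pi]\), so the direction can wrap around the circle twice across the noise range.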

03 Displacement Algorithm

Algorithm 1: Triangle Displacement and Rendering

For each vertex \(v_i\) of each triangle in \(\mathcal{T}\):

a. Sample noise: \(n_i = \text{Perlin}(v_i.x \cdot z, v_i.y \cdot z, \tau \cdot t)\)

b. Compute angle: \(\theta_i = 2\pi \cdot \eta \cdot n_i\), with \(\eta = 2\)

c. Get brightness: \(b_i = B(I(v_i.x, v_i.y)) / 100\)

d. Calculate magnitude: \(m_i = \delta \cdot b_i\)

e. Displace vertex: \(v'_i = v_i + m_i \cdot [\cos(\theta_i), \sin(\theta_i)]^T\)

3.1 Vertex Displacement

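For each vertex \(v\) in a triangle, the displacement vector \(\vec{d}\) is calculated as:

\[\vec{d}(v) = M(v) \cdot [\cos\theta(v), \sin\theta(v)]^T\]

where \(\theta(v)\) is the flow field angle at \(v\) and the magnitude function \(M(v)\) is defined as:

\[M(v) = \delta \cdot \frac{B(I(v.x, v.y))}{B_{max}}\]

Here, \(\delta\) represents the maximum displacement amount, creating a brightness-modulated displacement field where brighter regions experience greater deformation.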
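Steps c to e of Algorithm 1 can be sketched for a single vertex as follows. The function name and the choice to return the magnitude alongside the new position are illustrative assumptions; the flow angle is taken as an input.

```python
import math

def displace_vertex(v, theta, brightness, delta, b_max=100.0):
    """Move vertex v along angle theta by delta * brightness / b_max.

    Returns the displaced position and the magnitude, since the
    magnitude is reused by the color stage.
    """
    m = delta * brightness / b_max
    x = v[0] + m * math.cos(theta)
    y = v[1] + m * math.sin(theta)
    return (x, y), m

# Usage: a fully bright vertex pushed along the positive x axis.
moved, magnitude = displace_vertex((10.0, 20.0), theta=0.0, brightness=100.0, delta=5.0)
```

A vertex over a black pixel has zero magnitude and stays in place, which is what anchors dark regions of the image while bright regions drift with the flow field.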

3.2 Dynamic Color Transformation

Beyond geometric displacement, we apply color transformations in HSB (Hue, Saturation, Brightness) space to enhance the perception of depth and motion.

3.2.1 Hue Shift

The hue component undergoes a shift based on the average noise value across the triangle's vertices:

where the hue shift \(\Delta H\) is computed as:

This creates a color warping effect that follows the flow field patterns, with \(h_s\) controlling the maximum hue shift amplitude.

3.2.2 Saturation Fade

To simulate atmospheric perspective and depth cues, we implement displacement-proportional saturation reduction:

where \(\bar{m}\) is the average displacement magnitude across the triangle's vertices, and \(s_f\) controls the fade intensity. This creates a desaturation effect for highly displaced regions, mimicking depth-based color fading.
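The two color transformations might be sketched as below. The exact formulas are assumptions made for illustration: the hue shift is taken to be \(h_s\) times the mean vertex noise, wrapped to a 360-degree hue circle, and the saturation is taken to fall off linearly with the mean displacement relative to \(\delta\). The original expressions may differ.

```python
def shift_hue(h, noise_values, h_s, h_max=360.0):
    """Assumed form: H' = (H + h_s * mean(noise)) mod h_max."""
    n_bar = sum(noise_values) / len(noise_values)
    return (h + h_s * n_bar) % h_max

def fade_saturation(s, magnitudes, delta, s_f):
    """Assumed form: S' = S * (1 - s_f * mean(m) / delta), clamped at 0."""
    m_bar = sum(magnitudes) / len(magnitudes)
    ratio = m_bar / delta if delta > 0 else 0.0
    return max(0.0, s * (1.0 - s_f * ratio))

# Usage: one triangle with per-vertex noise values and displacement magnitudes.
new_h = shift_hue(350.0, [0.5, 0.5, 0.5], h_s=40.0)
new_s = fade_saturation(80.0, [5.0, 5.0, 5.0], delta=10.0, s_f=0.5)
```

Both functions average over the triangle's three vertices, so each triangle receives a single flat color adjustment rather than a per-pixel gradient.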

04 Implementation Details

4.1 Optimization Strategies

The algorithm achieves real-time performance through several key optimizations:

- Spatial Coherence: The Delaunay triangulation is computed once per parameter change, avoiding redundant geometric computations.

- Temporal Coherence: Perlin noise evaluation uses incremental time steps, maintaining smooth animation without recalculating the entire field.

- Brightness Caching: Image brightness values are pre-computed and stored during the sampling phase, eliminating redundant pixel reads.

- Triangle Culling: Triangles with all vertices outside the viewport are skipped, reducing rendering overhead.
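Two of these optimizations, brightness caching and triangle culling, can be sketched in a few lines. The helper names and the `brightness_at` callback are hypothetical; the culling rule follows the description above literally.

```python
def build_brightness_cache(points, brightness_at):
    """Read each sampled point's brightness once and store it by position."""
    return {p: brightness_at(p[0], p[1]) for p in points}

def triangle_visible(tri, width, height):
    """False only when every vertex lies outside the viewport rectangle."""
    return any(0 <= x <= width and 0 <= y <= height for x, y in tri)

def cull(triangles, width, height):
    """Keep the triangles that have at least one vertex in the viewport."""
    return [tri for tri in triangles if triangle_visible(tri, width, height)]

# Usage: one triangle inside an 800x800 viewport, one entirely off-screen.
tris = [
    [(10, 10), (50, 20), (30, 60)],
    [(-40, -10), (-5, -30), (-20, -60)],
]
kept = cull(tris, 800, 800)
```

Note that a vertex-only test can discard a large triangle that overlaps the viewport while all three vertices lie outside it. Comparing the triangle's bounding box with the viewport is the conservative alternative if such triangles can occur.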

4.2 Parameter Space

The algorithm exposes five primary parameters that control the visual output:

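- ρ (Density): overall sampling rate of the brightness-weighted point sampling (Section 2.1).

- δ (Displacement): maximum displacement amount applied to a vertex (Section 3.1).

- z (Zoom): spatial frequency of the noise field (Section 2.3).

- h_s (Hue Shift): maximum hue shift amplitude (Section 3.2.1).

- s_f (Saturation): intensity of the saturation fade (Section 3.2.2).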

05 Results and Analysis

5.1 Visual Characteristics

The algorithm produces several distinctive visual effects:

- Organic Flow Patterns: The Perlin noise field creates natural, fluid-like distortions that avoid the mechanical appearance of linear transformations.

- Feature Preservation: Brightness-based sampling ensures that important image features (typically brighter regions) maintain higher triangle density, preserving detail where it matters most.

- Depth Illusion: The combination of geometric displacement and color transformations creates a compelling pseudo-3D effect, with displaced regions appearing to float above or sink below the image plane.

- Temporal Dynamics: The time-evolving noise field produces smooth, hypnotic animations that suggest organic movement or breathing effects.

5.2 Performance Metrics

| Operation | Complexity | Typical Time | Notes |
|---|---|---|---|
| Point Sampling | O(n) | ~5 ms | n = sampled points |
| Delaunay Triangulation | O(n log n) | ~20 ms | Divide-and-conquer |
| Per-frame Rendering | O(t) | ~10 ms | t = triangle count |
| Frame Rate | - | 30-60 FPS | 800×800 px, moderate density |

06 Applications

The presented algorithm finds applications in various domains:

- Digital Art and Design: Creating unique visual effects for artistic expression and generative art projects.

- Motion Graphics: Generating dynamic backgrounds and transitions for video production.

- Data Visualization: Applying the technique to scientific visualization for emphasizing data patterns through controlled distortion.

- Interactive Media: Real-time image effects for games, installations, and interactive experiences.

- Glitch Art: Controlled corruption and distortion effects for aesthetic purposes.

07 Future Work

Several avenues for future research and development present themselves:

- Multi-scale Flow Fields: Implementing octave-based noise with varying frequencies to create more complex displacement patterns.

- Adaptive Refinement: Dynamic triangle subdivision based on local image complexity or user interaction.

- GPU Acceleration: Implementing the algorithm using WebGL or compute shaders for improved performance.

- Machine Learning Integration: Using neural networks to learn optimal displacement patterns based on image content.

- 3D Extension: Extending the technique to true 3D space with depth map generation from single images.
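The multi-scale flow field item could be prototyped with a conventional octave sum, in which each octave raises the frequency and lowers the amplitude. This is a sketch of that general technique, not part of the presented method; `lacunarity` and `gain` are the usual names for the per-octave frequency and amplitude factors, and the noise function is passed in as an assumption.

```python
def octave_noise(x, y, t, noise, octaves=4, lacunarity=2.0, gain=0.5):
    """Amplitude-weighted sum of noise at increasing spatial frequencies.

    The result is divided by the total amplitude, so it stays in the
    same range as the underlying noise function.
    """
    total, norm = 0.0, 0.0
    amplitude, frequency = 1.0, 1.0
    for _ in range(octaves):
        total += amplitude * noise(x * frequency, y * frequency, t)
        norm += amplitude
        amplitude *= gain
        frequency *= lacunarity
    return total / norm
```

The result could replace the single noise sample in the angle computation, leaving the rest of the displacement pipeline unchanged.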

08 Conclusion

We have presented a novel approach to image displacement mapping that combines flow field dynamics with adaptive triangulation to create compelling visual effects. The method's key innovations include brightness-weighted sampling for intelligent mesh generation, Perlin noise-based flow fields for organic displacement patterns, and sophisticated color transformations that enhance depth perception.

The algorithm's real-time performance and intuitive parameter space make it suitable for both artistic applications and technical visualization tasks. By bridging computational geometry, fluid dynamics, and digital art, this work demonstrates the potential for cross-disciplinary approaches in creating new forms of visual expression.

The open-source implementation and web-based deployment ensure accessibility for artists, designers, and researchers, fostering further exploration and development of flow field-based visualization techniques.


Interactive Flow Field Implementation

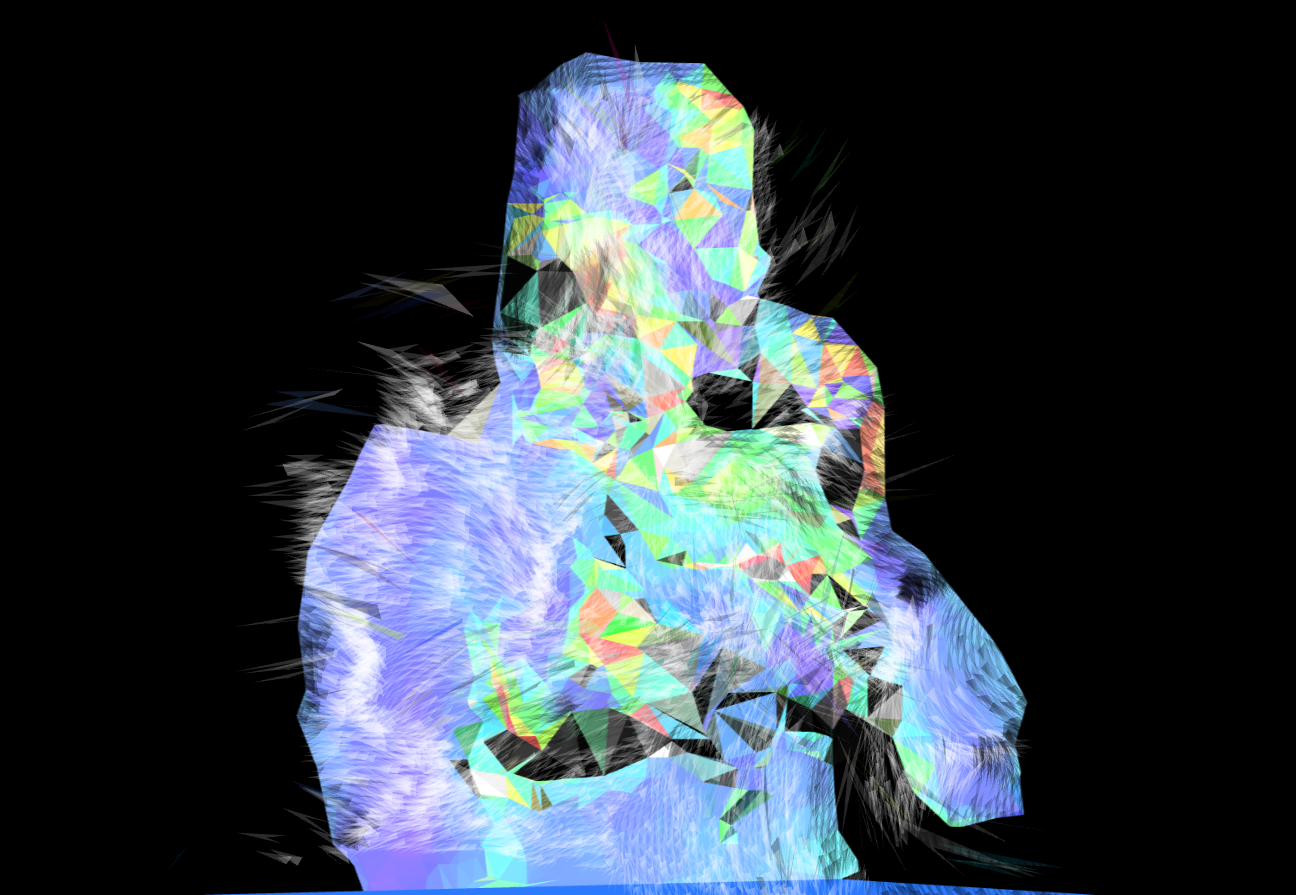

Outputs from some initial experiments exploring the parameter space